In a significant development poised to reshape our interaction with artificial intelligence, Google has quietly unveiled a groundbreaking application: the Google AI Edge Gallery. This experimental app allows users to download powerful AI models and run them directly on their smartphones, entirely offline once the models are on the device. Far from being just a niche tool, this move signals a profound shift towards “on-device AI” or “edge AI,” promising enhanced privacy, blistering speed, and unparalleled accessibility in the burgeoning world of artificial intelligence.

What is Google AI Edge Gallery and Why Does It Matter?

For years, the promise of advanced AI has largely been tethered to the cloud. Powerful models resided on remote servers, demanding a constant internet connection to process queries and deliver results. While effective, this cloud-centric approach presented inherent limitations: privacy concerns over data transmission, latency issues, and a complete reliance on network availability.

The Google AI Edge Gallery directly addresses these challenges. It’s an Android application (with an iOS version reportedly on the horizon, as noted by various tech news outlets) that empowers users to bring AI models from platforms like Hugging Face, including Google’s own compact yet potent Gemma 3n, onto their personal devices. This development, though initially a quiet rollout, is being closely watched by industry observers for its potential to democratize access to advanced AI capabilities.

The “Why”: Addressing Privacy, Speed, and Offline Needs

- Uncompromised Privacy: Perhaps the most compelling benefit, and one frequently highlighted by privacy advocates, is data privacy. When AI models run locally, your sensitive information—be it text, images, or personal queries—never leaves your device. This eliminates the need to transmit data to third-party cloud servers, significantly reducing the risk of security breaches and giving users greater control over their digital footprint. This is a critical consideration in an era where data security is paramount.

- Blazing Fast Performance: Say goodbye to network lag. Because processing happens directly on your smartphone’s own hardware, there is no round trip to a remote server, so on capable devices responses can begin almost immediately. This low latency is crucial for real-time applications, making interactions feel more fluid and natural than the delays sometimes experienced with cloud-based AI. Actual speed still depends on the device and the size of the model.

- True Offline Accessibility: Imagine generating code, summarizing documents, or creating images with AI even in areas with no Wi-Fi or cellular signal. The AI Edge Gallery makes this a reality, opening up AI capabilities in remote locations, during travel, or in situations where connectivity is unreliable. This expands the utility of AI far beyond well-connected urban environments.

- Reduced Costs: By offloading computation from cloud servers, users can potentially save on data usage and avoid ongoing subscription fees often associated with premium cloud-based AI services. This makes AI more accessible from an economic standpoint.
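The latency argument can be illustrated with a deliberately simple model. The Python sketch below only estimates time to first token, and every number in it is a hypothetical value chosen for illustration, not a measurement of any device or service: a cloud request pays a network round trip plus server-side prompt processing, while a local request pays only on-device prompt processing.

```python
def time_to_first_token_ms(prefill_ms: float, network_rtt_ms: float = 0.0) -> float:
    """Rough time until the first output token appears.

    prefill_ms: time spent processing the prompt on whichever hardware runs the model.
    network_rtt_ms: round-trip time to a remote server (0 when running on-device).
    """
    if prefill_ms < 0 or network_rtt_ms < 0:
        raise ValueError("times must be non-negative")
    return network_rtt_ms + prefill_ms


# Hypothetical numbers for illustration only.
cloud = time_to_first_token_ms(prefill_ms=50, network_rtt_ms=150)  # fast server, slow network
local_fast = time_to_first_token_ms(prefill_ms=120)                # capable phone
local_slow = time_to_first_token_ms(prefill_ms=400)                # older phone, larger model

print(f"cloud: {cloud} ms, local (fast phone): {local_fast} ms, local (slow phone): {local_slow} ms")
```

Under these assumed numbers the capable phone beats the cloud, but a slow device running a large model can fall behind it, which is why the speed benefit depends so much on hardware.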

Key Features and Capabilities

The AI Edge Gallery is designed for both ease of use and developer flexibility:

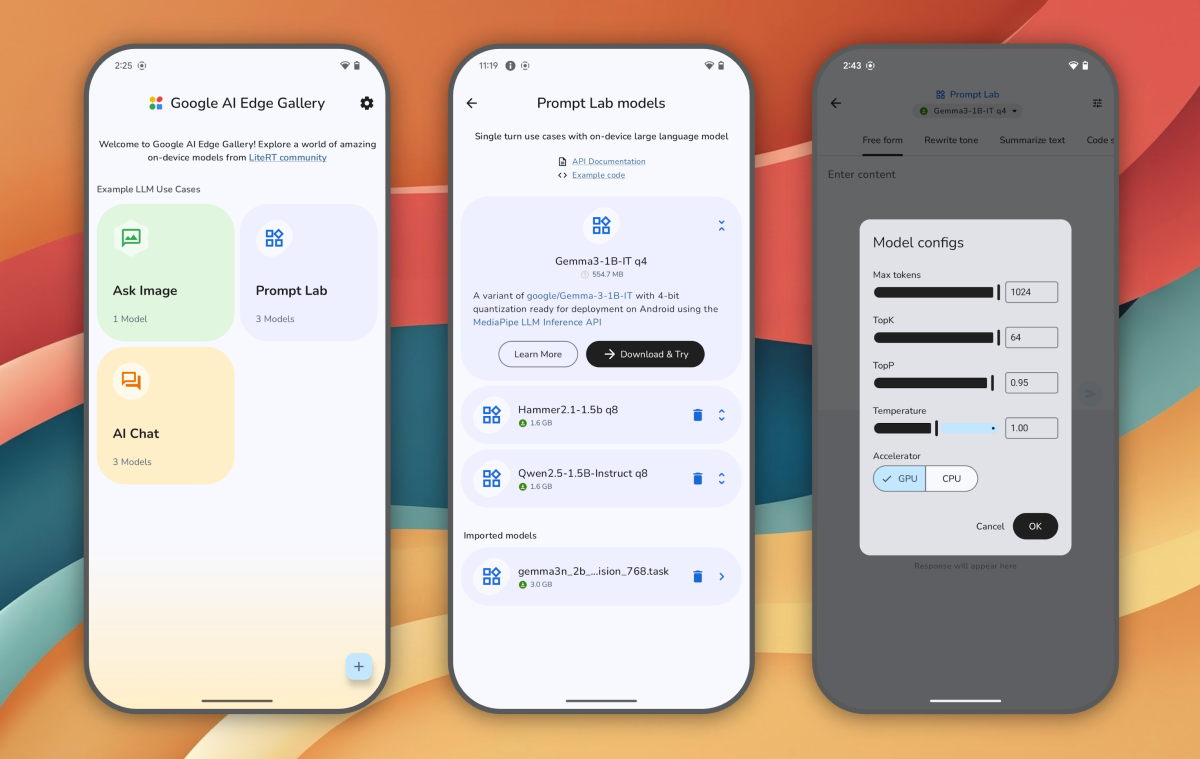

- Model Variety: It supports a range of open AI models, including Google’s Gemma 3 1B, a compact yet powerful language model weighing in at just 529MB that can process thousands of tokens per second when reading in a prompt (the “prefill” stage). The app also integrates with Hugging Face, a widely used hub for open-source AI models, broadening the scope of available functionalities.

- Intuitive Interface: Users can explore features like “AI Chat” for conversational AI, “Ask Image” for image understanding, and a “Prompt Lab” for experimenting with short, single-turn prompts, complete with preset templates and customizable settings. This user-friendly design aims to make advanced AI accessible to a wider audience.

- Open-Source Foundation: Released under the Apache 2.0 license, the app is fully open-source. This decision by Google encourages developers and companies to use, modify, and even integrate it into their commercial products, fostering a dynamic and collaborative ecosystem for mobile AI development.
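A back-of-the-envelope calculation shows why a 1-billion-parameter model can fit in roughly half a gigabyte. The Python sketch below multiplies the parameter count by the bits stored per weight; it assumes exactly one billion parameters for round numbers and ignores tokenizer files, metadata, and any layers kept at higher precision.

```python
def model_size_mb(num_params: int, bits_per_weight: int) -> float:
    """Approximate weight storage in megabytes (1 MB = 1,000,000 bytes).

    Ignores tokenizer files, metadata, and any layers kept at higher precision.
    """
    total_bytes = num_params * bits_per_weight / 8
    return total_bytes / 1_000_000


# Assumption: exactly one billion parameters, for round numbers.
params = 1_000_000_000
for label, bits in [("float32", 32), ("float16", 16), ("int8", 8), ("int4", 4)]:
    print(f"{label:>7}: ~{model_size_mb(params, bits):,.0f} MB")
```

The 529MB figure sits closest to the 4-bit estimate, which is consistent with aggressive quantization plus some overhead. That is an inference from the arithmetic, not a published breakdown of the model file.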

How to Access Google AI Edge Gallery

At launch, the Google AI Edge Gallery is available as an experimental Alpha release. This means it’s still under active development and testing, and its distribution method reflects this early stage.

Currently, the app is available to Android users primarily via GitHub. Installing it typically involves downloading the Android Package Kit (APK) directly from the official GitHub repository and installing it manually on your device. Google provides official user guides on the GitHub page to assist with this process.
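For readers who prefer installing from a computer, Android’s standard `adb install` command can sideload an APK onto a phone that has USB debugging enabled. The Python sketch below merely wraps that command with a basic file check; the APK file name is a hypothetical placeholder, and the official guides on the GitHub page remain the authoritative instructions.

```python
import shutil
import subprocess
from pathlib import Path


def adb_install_command(apk_path: str) -> list[str]:
    """Build the `adb install` command for a downloaded APK, after a basic sanity check."""
    path = Path(apk_path)
    if path.suffix.lower() != ".apk":
        raise ValueError(f"expected an .apk file, got {path.name!r}")
    return ["adb", "install", str(path)]


def install_apk(apk_path: str) -> None:
    """Run `adb install`; requires the Android platform tools on PATH and a connected device."""
    if shutil.which("adb") is None:
        raise RuntimeError("adb not found; install the Android SDK platform tools first")
    subprocess.run(adb_install_command(apk_path), check=True)


if __name__ == "__main__":
    # Hypothetical file name; use the APK you actually downloaded from the official repository.
    print(adb_install_command("ai-edge-gallery.apk"))
```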

While the app is currently exclusive to Android, an iOS version is reportedly on the horizon, which suggests Google intends to bring on-device AI capabilities to a broader mobile audience across platforms. As an “experimental Alpha” product, the app may perform differently from device to device and may undergo frequent updates and changes.

How Does Local AI Inference Work?

Running complex AI models on a device, rather than a supercomputer in the cloud, is a marvel of modern engineering. It represents a significant leap in optimizing computational power for consumer hardware.

The Technical Underpinnings

At its core, local AI inference involves optimizing large AI models to run efficiently on the constrained hardware of consumer devices like smartphones. Google’s AI Edge Gallery builds on the company’s AI Edge platform, which includes foundational technologies like LiteRT (formerly TensorFlow Lite) and MediaPipe. These frameworks are specifically designed to:

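- Quantize and Compress Models: Significantly reduce the size and computational requirements of AI models, typically at the cost of only a modest loss in accuracy, for example by storing weights as 8-bit or 4-bit integers instead of 32-bit floating-point numbers. This is crucial for fitting powerful models onto devices with limited storage and memory.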

- Optimize for Mobile Hardware: Run AI computations on the specialized components found in modern smartphones, such as Neural Processing Units (NPUs) and Graphics Processing Units (GPUs), and use optimized CPU code where no suitable accelerator is available. This helps the device keep up with the intensive processing demands.

- Efficient Data Handling: Process data locally in ways that reduce energy use and improve speed, helping to limit overheating and battery drain during sustained use.
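To make the quantization idea concrete, here is a minimal Python sketch of one common approach, symmetric per-tensor int8 quantization. It is a generic illustration of the technique, not the specific scheme LiteRT or MediaPipe applies to any particular model: each weight is divided by a single scale factor, rounded to an integer in [-127, 127], and multiplied back by that scale when the model runs.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    # One scale for the whole tensor; guard against an all-zero tensor.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int8(q: list[int], scale: float) -> list[float]:
    """Recover approximate float weights from the stored integers and the scale."""
    return [v * scale for v in q]


weights = [0.52, -1.27, 0.0, 0.013, 0.9]
q, scale = quantize_int8(weights)
restored = dequantize_int8(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

# Each weight now needs 1 byte instead of 4 (float32), plus one shared scale.
print(q, f"scale={scale:.4f}", f"max error={max_error:.4f}")
```

Production toolchains refine this with per-channel scales, 4-bit formats, and calibration data, but the trade-off is the same: a roughly fourfold size reduction versus float32 in exchange for a small rounding error per weight, at most half the scale in this scheme.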

System Requirements and Performance Considerations

While the app aims for broad accessibility, performance can vary greatly depending on the device. Newer, higher-end smartphones with dedicated AI accelerators (NPUs) and ample RAM will naturally offer a smoother and faster experience, especially with larger models. Older or mid-range devices might still run lighter models effectively, but users might experience slower inference times or increased battery drain with more demanding tasks. Google itself notes that performance may vary, advising users with less powerful devices to opt for smaller models for optimal results.
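The advice to choose smaller models on less powerful devices can be expressed as a simple selection rule. The Python sketch below is hypothetical: apart from the 529MB Gemma 3 1B figure quoted earlier, the catalog entries are invented, and the 1.5× memory headroom (leaving room for the context cache and the rest of the system) is an assumed rule of thumb rather than a documented requirement. The accelerator preference order, NPU then GPU then CPU, is likewise an assumption.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelOption:
    name: str
    size_mb: int


# Hypothetical catalog; only the 529 MB entry comes from the article.
CATALOG = [
    ModelOption("gemma-3-1b", 529),
    ModelOption("hypothetical-3b", 1800),
    ModelOption("hypothetical-7b", 4000),
]


def pick_model(options, free_ram_mb: float, headroom: float = 1.5):
    """Return the largest model whose size times `headroom` fits in free RAM, or None."""
    fitting = [m for m in options if m.size_mb * headroom <= free_ram_mb]
    return max(fitting, key=lambda m: m.size_mb, default=None)


def pick_backend(available: set[str], preference=("npu", "gpu", "cpu")) -> str:
    """Return the first preferred accelerator the device reports; CPU is the usual fallback."""
    for backend in preference:
        if backend in available:
            return backend
    raise ValueError("no supported backend available")


print(pick_model(CATALOG, free_ram_mb=1000), pick_backend({"gpu", "cpu"}))
print(pick_model(CATALOG, free_ram_mb=3000), pick_backend({"npu", "gpu", "cpu"}))
```

Returning `None` when nothing fits, rather than silently picking the smallest model, leaves it to the app to tell the user that their device is below the assumed threshold.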

Use Cases and Potential Impact

The implications of widespread on-device AI are vast, touching various aspects of our digital lives and reshaping industries.

For Developers and Researchers

- Rapid Prototyping: Developers can quickly test and iterate on AI models without incurring cloud computing costs or dealing with network latency, accelerating the development cycle.

- Edge-Native Applications: It paves the way for a new generation of applications that are inherently private, responsive, and functional even without internet connectivity, opening up new markets and use cases.

- Democratization of AI: By making powerful models accessible on consumer hardware, it significantly lowers the barrier to entry for AI development and experimentation, fostering innovation from a broader community.

For Everyday Users

- Enhanced Privacy for Sensitive Tasks: Imagine using AI for medical queries, financial advice, or personal journaling with the assurance that your data remains entirely private. This builds trust and encourages more personal use of AI.

- AI Everywhere, Anytime: From intelligent assistants that work on a remote hiking trail to real-time language translation in areas with no signal, AI becomes truly ubiquitous. This expands the utility of AI to previously inaccessible scenarios.

- Creative Freedom: Generate images, write stories, or compose music on the go, without worrying about internet availability or data caps. This empowers users with creative tools at their fingertips.

Implications for Enterprise and Digital Marketing

Businesses handling highly sensitive data (e.g., healthcare, finance, legal) can leverage local AI for processing confidential information securely on-premises or on employee devices, adhering to strict compliance regulations. This reduces the attack surface associated with cloud data transfers and storage.

In the realm of digital marketing, the rise of on-device AI could lead to more personalized and immediate user experiences. Imagine AI assistants on your phone providing instant, context-aware recommendations without sending your data to a server. Local AI might also change how users discover and interact with information, which could affect search strategies built around featured snippets (“position zero”) and answer engine optimization. The broader use of AI tools in digital marketing will likely evolve alongside these new capabilities.

The Broader Trend: Local AI is Here to Stay

Google’s quiet release of the AI Edge Gallery is not an isolated phenomenon but a strong indicator of a burgeoning trend: the shift towards edge AI and local inference. This movement is gaining significant momentum across the tech industry.

Edge AI and the Future of Computing

Edge AI refers to the deployment of AI algorithms directly on local “edge” devices—smartphones, IoT sensors, industrial machinery, autonomous vehicles—rather than relying solely on centralized cloud servers. This paradigm is driven by several compelling factors:

- Exploding Data Volumes: The sheer amount of data generated by connected devices makes it impractical and costly to send everything to the cloud for processing. Processing data closer to its source is more efficient.

- Real-time Requirements: Many modern applications, from self-driving cars to smart factory automation, demand immediate decision-making that cloud latency cannot always provide. Edge AI enables instantaneous responses.

- Resilience: Edge AI systems can operate independently, even if network connectivity to the cloud is interrupted, ensuring continuous functionality in critical applications.

By bringing AI capabilities closer to the data source, edge computing reduces bandwidth consumption, enhances security, and enables faster, more reliable AI applications. Some industry analysts predict that by 2027 edge AI will be integrated into a majority of edge devices, making it a cornerstone of future computing architectures.

Challenges and Opportunities

While the benefits are clear, the path to widespread local AI isn’t without its hurdles:

- Hardware Limitations: Optimizing complex models for diverse and often resource-constrained hardware remains a significant engineering challenge.

- Energy Consumption: Running intensive AI tasks locally can lead to increased battery drain and device heating, requiring innovative solutions for power management.

- Model Updates and Management: Keeping local models updated and managing their versions across many distributed devices can be more complex than centralized cloud deployments, necessitating robust deployment strategies.
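One way to tame the model-management problem is to have each device compare its installed model versions against a central manifest and download only what has changed. The Python sketch below assumes a hypothetical manifest that maps model names to dotted version strings; it is not a format used by the AI Edge Gallery.

```python
def parse_version(version: str) -> tuple[int, ...]:
    """Turn a dotted version like '1.10.0' into (1, 10, 0) so it compares numerically."""
    return tuple(int(part) for part in version.split("."))


def models_needing_update(installed: dict[str, str], manifest: dict[str, str]) -> list[str]:
    """Names of installed models for which the manifest lists a newer version."""
    return sorted(
        name
        for name, latest in manifest.items()
        if name in installed and parse_version(latest) > parse_version(installed[name])
    )


# Hypothetical state of one device and of the central manifest.
installed = {"chat-model": "1.2.0", "vision-model": "2.0.1"}
manifest = {"chat-model": "1.10.0", "vision-model": "2.0.1", "audio-model": "0.1.0"}

print(models_needing_update(installed, manifest))
```

Comparing versions as integer tuples avoids the classic string-comparison bug where "1.10.0" sorts before "1.2.0". Models the device has never installed, such as `audio-model` here, are skipped, so new downloads stay opt-in.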

However, these challenges also present immense opportunities for innovation in hardware design, software optimization, and decentralized AI management solutions, pushing the boundaries of what’s possible with AI.

Conclusion

Google’s quiet release of the AI Edge Gallery is anything but minor. It is a clear statement about the future of artificial intelligence: a future where AI is not just a cloud service but a personal, private, and always-available companion residing directly in our pockets. As this technology matures, it could have a lasting impact on everything from personal productivity and creativity to enterprise security and the architecture of computing itself. The era of on-device AI has begun, promising a more private, faster, and more widely accessible artificial intelligence experience.